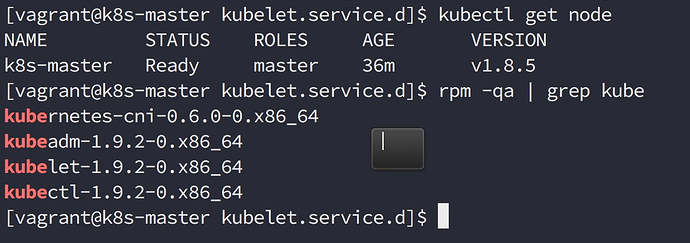

Node status and k8s version information

[vagrant@k8s-master ~]$ kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 4m v1.8.5

[vagrant@k8s-master ~]$

Pod status

[vagrant@k8s-master ~]$ kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

etcd-k8s-master 1/1 Running 0 1m

kube-apiserver-k8s-master 1/1 Running 0 1m

kube-controller-manager-k8s-master 1/1 Running 0 2m

kube-dns-566dc99866-7txk5 0/3 ContainerCreating 0 2m

kube-flannel-ds-d47g2 1/1 Running 0 2m

kube-proxy-pv9rw 1/1 Running 0 2m

kube-scheduler-k8s-master 1/1 Running 0 2m

kubernetes-dashboard-f49bbf6d9-dhgj7 0/1 ContainerCreating 0 2m
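With a longer pod list it is easy to miss the ones that are stuck. A minimal sketch for filtering them out follows. The function name `not_running_pods` is made up for illustration, and it assumes the default column order (NAME READY STATUS RESTARTS AGE) shown above.

```shell
# Print "NAME STATUS" for every pod whose STATUS column is not "Running".
# Reads `kubectl get po` output on stdin.
# Assumption: default columns NAME READY STATUS RESTARTS AGE, header on line 1.
not_running_pods() {
  awk 'NR > 1 && NF >= 3 && $3 != "Running" { print $1 " " $3 }'
}

# Usage against a live cluster (not executed here):
#   kubectl get po -n kube-system | not_running_pods
```

On the listing above this would print the kube-dns and kubernetes-dashboard pods, both in ContainerCreating.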

kube-dns pod events:

[vagrant@k8s-master ~]$ kubectl describe po kube-dns-566dc99866-7txk5 -n kube-system

Name: kube-dns-566dc99866-7txk5

Namespace: kube-system

Node: k8s-master/192.168.119.101

Start Time: Thu, 13 Sep 2018 07:02:55 +0000

Labels: k8s-app=kube-dns

pod-template-hash=1228755422

Annotations: kubernetes.io/created-by={"kind":"SerializedReference","apiVersion":"v1","reference":{"kind":"ReplicaSet","namespace":"kube-system","name":"kube-dns-566dc99866","uid":"c080c790-b722-11e8-836b-5254005f...

Status: Pending

IP:

Created By: ReplicaSet/kube-dns-566dc99866

Controlled By: ReplicaSet/kube-dns-566dc99866

Containers:

kubedns:

Container ID:

Image: registry.cn-hangzhou.aliyuncs.com/google_containers/k8s-dns-kube-dns-amd64:1.14.5

Image ID:

Ports: 10053/UDP, 10053/TCP, 10055/TCP

Args:

--domain=cluster.local.

--dns-port=10053

--config-dir=/kube-dns-config

--v=2

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Limits:

memory: 170Mi

Requests:

cpu: 100m

memory: 70Mi

Liveness: http-get http://:10054/healthcheck/kubedns delay=60s timeout=5s period=10s #success=1 #failure=5

Readiness: http-get http://:8081/readiness delay=3s timeout=5s period=10s #success=1 #failure=3

Environment:

PROMETHEUS_PORT: 10055

Mounts:

/kube-dns-config from kube-dns-config (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-dns-token-bqbpk (ro)

dnsmasq:

Container ID:

Image: registry.cn-hangzhou.aliyuncs.com/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.5

Image ID:

Ports: 53/UDP, 53/TCP

Args:

-v=2

-logtostderr

-configDir=/etc/k8s/dns/dnsmasq-nanny

-restartDnsmasq=true

--

-k

--cache-size=1000

--log-facility=-

--server=/cluster.local/127.0.0.1#10053

--server=/in-addr.arpa/127.0.0.1#10053

--server=/ip6.arpa/127.0.0.1#10053

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Requests:

cpu: 150m

memory: 20Mi

Liveness: http-get http://:10054/healthcheck/dnsmasq delay=60s timeout=5s period=10s #success=1 #failure=5

Environment: <none>

Mounts:

/etc/k8s/dns/dnsmasq-nanny from kube-dns-config (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-dns-token-bqbpk (ro)

sidecar:

Container ID:

Image: registry.cn-hangzhou.aliyuncs.com/google_containers/k8s-dns-sidecar-amd64:1.14.5

Image ID:

Port: 10054/TCP

Args:

--v=2

--logtostderr

--probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,A

--probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,A

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Requests:

cpu: 10m

memory: 20Mi

Liveness: http-get http://:10054/metrics delay=60s timeout=5s period=10s #success=1 #failure=5

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-dns-token-bqbpk (ro)

Conditions:

Type Status

Initialized True

Ready False

PodScheduled True

Volumes:

kube-dns-config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: kube-dns

Optional: true

kube-dns-token-bqbpk:

Type: Secret (a volume populated by a Secret)

SecretName: kube-dns-token-bqbpk

Optional: false

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: CriticalAddonsOnly

node-role.kubernetes.io/master:NoSchedule

node.alpha.kubernetes.io/notReady:NoExecute for 300s

node.alpha.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 1m (x8 over 2m) default-scheduler No nodes are available that match all of the predicates: NodeNotReady (1).

Normal Scheduled 24s default-scheduler Successfully assigned kube-dns-566dc99866-7txk5 to k8s-master

Normal SuccessfulMountVolume 24s kubelet, k8s-master MountVolume.SetUp succeeded for volume "kube-dns-config"

Normal SuccessfulMountVolume 24s kubelet, k8s-master MountVolume.SetUp succeeded for volume "kube-dns-token-bqbpk"

Warning FailedCreatePodSandBox 23s kubelet, k8s-master Failed create pod sandbox.

Warning FailedSync 4s (x4 over 23s) kubelet, k8s-master Error syncing pod

Normal SandboxChanged 4s (x3 over 21s) kubelet, k8s-master Pod sandbox changed, it will be killed and re-created.
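The describe output is long, and only the Warning events at the end matter for this problem. A small sketch for pulling out just those rows follows. `warning_events` is a hypothetical helper name, and it assumes the `Events:` header starts at the beginning of a line, as it does in the output above.

```shell
# Print only the Warning rows from the Events section of
# `kubectl describe po` output read on stdin.
# Assumption: the section starts with a line beginning with "Events:".
warning_events() {
  sed -n '/^Events:/,$p' | grep -E '^[[:space:]]*Warning[[:space:]]' || true
}

# Usage against a live cluster (not executed here):
#   kubectl describe po kube-dns-566dc99866-7txk5 -n kube-system | warning_events
```

The event message "Failed create pod sandbox." carries no detail here. On a systemd host the kubelet log (for example via `journalctl -u kubelet`) is usually where the underlying error shows up.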

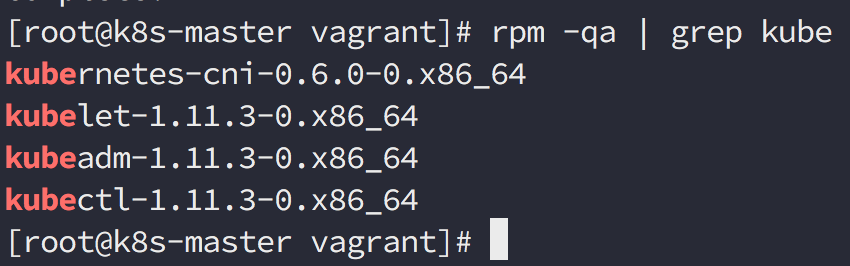

According to posts online, this is caused by kubernetes-cni 0.5.1 not shipping the portmap plugin. But kubeadm-ansible also uses 1.8.5, so how does it manage to deploy?
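One way to test the portmap theory is to look for the plugin binary on the node. This is only a sketch: `has_cni_plugin` is a made-up helper, and `/opt/cni/bin` is assumed to be the CNI plugin directory (the conventional default; the kubelet may be configured with a different one).

```shell
# Report whether a CNI plugin binary exists and is executable.
# $1: plugin name, e.g. portmap
# $2: plugin directory (assumed default: /opt/cni/bin)
has_cni_plugin() {
  plugin="$1"
  dir="${2:-/opt/cni/bin}"
  if [ -x "$dir/$plugin" ]; then
    echo "found: $dir/$plugin"
  else
    echo "missing: $dir/$plugin"
    return 1
  fi
}

# Usage on the node (not executed here):
#   has_cni_plugin portmap
```

If the binary turns out to be missing, comparing the same directory on a node deployed by kubeadm-ansible would show whether that project installs a different set of CNI plugins.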