- Choerodon platform version: 0.18.0

- Steps that led to the problem:

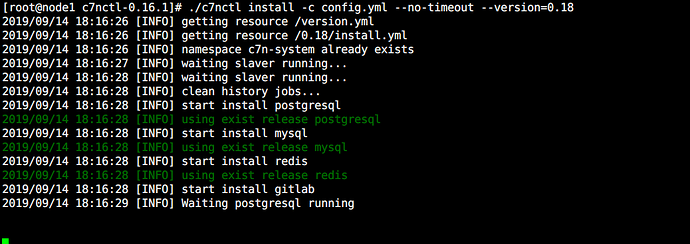

After running `./c7nctl install -c config.yml --no-timeout --version=0.18`, the installation stays stuck at `Waiting postgresql running`.

- Documentation: http://choerodon.io/zh/docs/installation-configuration/steps/install/choerodon/

- Environment information (e.g., node information):

- Error log:

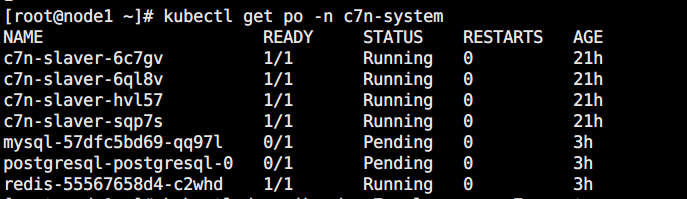

2. `kubectl get po -n c7n-system`

The mysql and postgresql pods remain in `Pending` status.

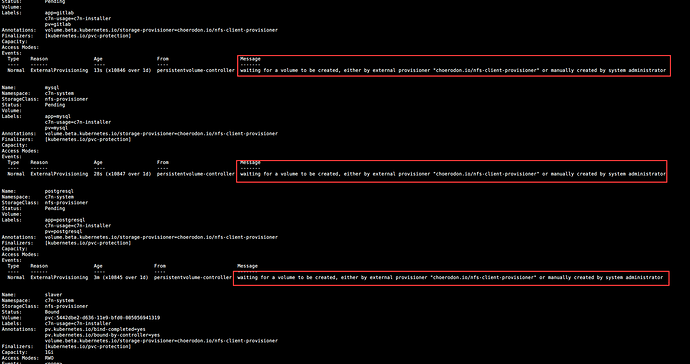
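The `Pending` pods and the scheduler's reason for each can also be pulled from the JSON form of the same listing (`kubectl get pods -n c7n-system -o json`). The script below is a minimal sketch. It assumes `kubectl` is on the PATH and can reach the cluster. The helper names `load_pods` and `pending_pods` are hypothetical, and the script reads only the standard Pod fields `status.phase` and `status.conditions`.

```python
import json
import subprocess


def load_pods(namespace: str) -> dict:
    """Run `kubectl get pods -o json` for one namespace and parse the output."""
    out = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return json.loads(out)


def pending_pods(pod_list: dict) -> list[tuple[str, str]]:
    """Return (pod name, scheduling message) for every pod still in phase Pending."""
    result = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        if status.get("phase") != "Pending":
            continue
        # A PodScheduled condition with status "False" carries the scheduler's reason.
        message = ""
        for cond in status.get("conditions", []):
            if cond.get("type") == "PodScheduled" and cond.get("status") == "False":
                message = cond.get("message", "")
        result.append((pod["metadata"]["name"], message))
    return result
```

Usage: `pending_pods(load_pods("c7n-system"))`. For the cluster above, this would be expected to list the mysql and postgresql pods together with the scheduler message shown in their events.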

3. `kubectl describe po -n c7n-system`

```
[root@node1 ~]# kubectl describe po -n c7n-system
Name:               c7n-slaver-6c7gv
Namespace:          c7n-system
Node:               node4/10.213.234.184
Start Time:         Fri, 13 Sep 2019 22:54:05 +0800
Labels:             app=c7n-slaver
                    c7n-usage=c7n-installer
                    controller-revision-hash=2464478952
                    pod-template-generation=1
Annotations:        <none>
Status:             Running
IP:                 10.233.66.5
Controlled By:      DaemonSet/c7n-slaver
Containers:
  c7n-slaver:
    Container ID:   docker://2cae1aa85f8cb182ba0a05381803abd251ae56df77a49c572d3fadf36b275cb4
    Image:          registry.cn-hangzhou.aliyuncs.com/choerodon-tools/c7n-slaver:0.1.1
    Image ID:       docker-pullable://registry.cn-hangzhou.aliyuncs.com/choerodon-tools/c7n-slaver@sha256:6ca1400dd1c021af6fa5ce1b846fea6142b93f8857df2114fa04a63e3c9f73a4
    Ports:          9000/TCP, 9001/TCP
    Host Ports:     0/TCP, 0/TCP
    State:          Running
      Started:      Sat, 14 Sep 2019 11:03:06 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /data from data (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-7f78p (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  PodScheduled      True
Volumes:
  data:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  slaver
    ReadOnly:   false
  default-token-7f78p:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-7f78p
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/disk-pressure:NoSchedule
                 node.kubernetes.io/memory-pressure:NoSchedule
                 node.kubernetes.io/not-ready:NoExecute
                 node.kubernetes.io/unreachable:NoExecute
Events:          <none>
```

```
Name:               c7n-slaver-6ql8v
Namespace:          c7n-system
Node:               node1/10.213.234.181
Start Time:         Fri, 13 Sep 2019 22:54:05 +0800
Labels:             app=c7n-slaver
                    c7n-usage=c7n-installer
                    controller-revision-hash=2464478952
                    pod-template-generation=1
Annotations:        <none>
Status:             Running
IP:                 10.233.64.4
Controlled By:      DaemonSet/c7n-slaver
Containers:
  c7n-slaver:
    Container ID:   docker://7b8d12c14436e49252a33218ef1ffbbdd5c6c7e1d5961a547ec348be76f27db6
    Image:          registry.cn-hangzhou.aliyuncs.com/choerodon-tools/c7n-slaver:0.1.1
    Image ID:       docker-pullable://registry.cn-hangzhou.aliyuncs.com/choerodon-tools/c7n-slaver@sha256:6ca1400dd1c021af6fa5ce1b846fea6142b93f8857df2114fa04a63e3c9f73a4
    Ports:          9000/TCP, 9001/TCP
    Host Ports:     0/TCP, 0/TCP
    State:          Running
      Started:      Sat, 14 Sep 2019 11:03:09 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /data from data (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-7f78p (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  PodScheduled      True
Volumes:
  data:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  slaver
    ReadOnly:   false
  default-token-7f78p:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-7f78p
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/disk-pressure:NoSchedule
                 node.kubernetes.io/memory-pressure:NoSchedule
                 node.kubernetes.io/not-ready:NoExecute
                 node.kubernetes.io/unreachable:NoExecute
Events:          <none>
```

```
Name:               c7n-slaver-hvl57
Namespace:          c7n-system
Node:               node2/10.213.234.182
Start Time:         Fri, 13 Sep 2019 23:00:13 +0800
Labels:             app=c7n-slaver
                    c7n-usage=c7n-installer
                    controller-revision-hash=2464478952
                    pod-template-generation=1
Annotations:        <none>
Status:             Running
IP:                 10.233.67.8
Controlled By:      DaemonSet/c7n-slaver
Containers:
  c7n-slaver:
    Container ID:   docker://35de5159b1187333fa7c42b86d1843f5f7a4adbf887e68ce1269586f90b8018e
    Image:          registry.cn-hangzhou.aliyuncs.com/choerodon-tools/c7n-slaver:0.1.1
    Image ID:       docker-pullable://registry.cn-hangzhou.aliyuncs.com/choerodon-tools/c7n-slaver@sha256:6ca1400dd1c021af6fa5ce1b846fea6142b93f8857df2114fa04a63e3c9f73a4
    Ports:          9000/TCP, 9001/TCP
    Host Ports:     0/TCP, 0/TCP
    State:          Running
      Started:      Sat, 14 Sep 2019 11:09:16 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /data from data (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-7f78p (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  PodScheduled      True
Volumes:
  data:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  slaver
    ReadOnly:   false
  default-token-7f78p:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-7f78p
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/disk-pressure:NoSchedule
                 node.kubernetes.io/memory-pressure:NoSchedule
                 node.kubernetes.io/not-ready:NoExecute
                 node.kubernetes.io/unreachable:NoExecute
Events:          <none>
```

```
Name:               c7n-slaver-sqp7s
Namespace:          c7n-system
Node:               node3/10.213.234.183
Start Time:         Fri, 13 Sep 2019 22:54:05 +0800
Labels:             app=c7n-slaver
                    c7n-usage=c7n-installer
                    controller-revision-hash=2464478952
                    pod-template-generation=1
Annotations:        <none>
Status:             Running
IP:                 10.233.65.7
Controlled By:      DaemonSet/c7n-slaver
Containers:
  c7n-slaver:
    Container ID:   docker://fb6ab95e735bec4eb95f484f701b922b7aa0dd083d51db34d26feddf81c25762
    Image:          registry.cn-hangzhou.aliyuncs.com/choerodon-tools/c7n-slaver:0.1.1
    Image ID:       docker-pullable://registry.cn-hangzhou.aliyuncs.com/choerodon-tools/c7n-slaver@sha256:6ca1400dd1c021af6fa5ce1b846fea6142b93f8857df2114fa04a63e3c9f73a4
    Ports:          9000/TCP, 9001/TCP
    Host Ports:     0/TCP, 0/TCP
    State:          Running
      Started:      Sat, 14 Sep 2019 11:03:06 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /data from data (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-7f78p (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  PodScheduled      True
Volumes:
  data:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  slaver
    ReadOnly:   false
  default-token-7f78p:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-7f78p
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/disk-pressure:NoSchedule
                 node.kubernetes.io/memory-pressure:NoSchedule
                 node.kubernetes.io/not-ready:NoExecute
                 node.kubernetes.io/unreachable:NoExecute
Events:          <none>
```

```
Name:           mysql-57dfc5bd69-qq97l
Namespace:      c7n-system
Node:           <none>
Labels:         choerodon.io/infra=mysql
                choerodon.io/release=mysql
                pod-template-hash=1389716825
Annotations:    choerodon.io/metrics-group=mysql
                choerodon.io/metrics-path=/metrics
Status:         Pending
IP:
Controlled By:  ReplicaSet/mysql-57dfc5bd69
Containers:
  mysql:
    Image:      mysql:5.7.22
    Port:       3306/TCP
    Host Port:  0/TCP
    Liveness:   tcp-socket :3306 delay=60s timeout=5s period=10s #success=1 #failure=3
    Environment:
      MYSQL_ROOT_PASSWORD:  xxxxx
      TZ:                   Asia/Shanghai
    Mounts:
      /etc/mysql/conf.d/my.cnf from config-volume (rw)
      /var/lib/mysql from mysql (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-7f78p (ro)
Conditions:
  Type           Status
  PodScheduled   False
Volumes:
  mysql:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  mysql
    ReadOnly:   false
  config-volume:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      mysql-cm
    Optional:  false
  default-token-7f78p:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-7f78p
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     <none>
Events:
  Type     Reason            Age                  From               Message
  ----     ------            ----                 ----               -------
  Warning  FailedScheduling  3m (x3586 over 17h)  default-scheduler  pod has unbound PersistentVolumeClaims (repeated 4 times)
```

```
Name:           postgresql-postgresql-0
Namespace:      c7n-system
Node:           <none>
Labels:         app=postgresql
                chart=postgresql-3.18.4
                controller-revision-hash=postgresql-postgresql-65c8878f95
                heritage=Tiller
                release=postgresql
                role=master
                statefulset.kubernetes.io/pod-name=postgresql-postgresql-0
Annotations:    <none>
Status:         Pending
IP:
Controlled By:  StatefulSet/postgresql-postgresql
Init Containers:
  init-chmod-data:
    Image:      docker.io/bitnami/minideb:latest
    Port:       <none>
    Host Port:  <none>
    Command:
      sh
      -c
      chown -R 1001:1001 /bitnami
      if [ -d /bitnami/postgresql/data ]; then
        chmod 0700 /bitnami/postgresql/data;
      fi
    Requests:
      cpu:        250m
      memory:     256Mi
    Environment:  <none>
    Mounts:
      /bitnami/postgresql from data (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-7f78p (ro)
Containers:
  postgresql-postgresql:
    Image:      docker.io/bitnami/postgresql:9.6.11
    Port:       5432/TCP
    Host Port:  0/TCP
    Requests:
      cpu:      250m
      memory:   256Mi
    Liveness:   exec [sh -c exec pg_isready -U "postgres" -h 127.0.0.1] delay=30s timeout=5s period=10s #success=1 #failure=6
    Readiness:  exec [sh -c exec pg_isready -U "postgres" -h 127.0.0.1] delay=5s timeout=5s period=10s #success=1 #failure=6
    Environment:
      PGDATA:             /bitnami/postgresql
      POSTGRES_USER:      postgres
      POSTGRES_PASSWORD:  <set to the key 'postgresql-password' in secret 'postgresql-postgresql'>  Optional: false
    Mounts:
      /bitnami/postgresql from data (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-7f78p (ro)
Conditions:
  Type           Status
  PodScheduled   False
Volumes:
  data:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  postgresql
    ReadOnly:   false
  default-token-7f78p:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-7f78p
    Optional:    false
QoS Class:       Burstable
Node-Selectors:  <none>
Tolerations:     <none>
Events:
  Type     Reason            Age                  From               Message
  ----     ------            ----                 ----               -------
  Warning  FailedScheduling  4m (x3586 over 17h)  default-scheduler  pod has unbound PersistentVolumeClaims (repeated 4 times)
```

```
Name:           redis-55567658d4-c2whd
Namespace:      c7n-system
Node:           node2/10.213.234.182
Start Time:     Sat, 14 Sep 2019 16:15:35 +0800
Labels:         choerodon.io/infra=redis
                choerodon.io/release=redis
                pod-template-hash=1112321480
Annotations:    choerodon.io/metrics-group=redis
                choerodon.io/metrics-path=/metrics
Status:         Running
IP:             10.233.67.9
Controlled By:  ReplicaSet/redis-55567658d4
Containers:
  redis:
    Container ID:   docker://9cfe6d06be090982103eb912a968c000dc5bf87ab12e40c8e73a9838201869d8
    Image:          redis:4.0.11
    Image ID:       docker-pullable://redis@sha256:ee891094f0bb1a76d11cdca6e33c4fdce5cba1f13234c5896d341f6d741034b1
    Port:           6379/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Sat, 14 Sep 2019 16:20:17 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /data from redis (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-7f78p (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  PodScheduled      True
Volumes:
  redis:
    Type:    EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:
  default-token-7f78p:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-7f78p
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     <none>
Events:          <none>
```
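Both pending pods report the scheduler event `pod has unbound PersistentVolumeClaims`. The claims they reference (`mysql` and `postgresql`) are therefore the next objects to inspect. The script below is a minimal sketch. It assumes `kubectl` can reach the cluster and parses the output of `kubectl get pvc -n c7n-system -o json`. The helper names `load_claims` and `unbound_claims` are hypothetical, and the script reads only the standard PVC fields `status.phase` and `spec.storageClassName`.

```python
import json
import subprocess


def load_claims(namespace: str) -> dict:
    """Run `kubectl get pvc -o json` for one namespace and parse the output."""
    out = subprocess.run(
        ["kubectl", "get", "pvc", "-n", namespace, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return json.loads(out)


def unbound_claims(pvc_list: dict) -> list[tuple[str, str, str]]:
    """Return (claim name, phase, storage class) for every PVC that is not Bound."""
    result = []
    for pvc in pvc_list.get("items", []):
        phase = pvc.get("status", {}).get("phase", "Unknown")
        if phase == "Bound":
            continue
        # Report the storage class so a missing or mismatched class is easy to spot.
        storage_class = pvc.get("spec", {}).get("storageClassName") or "<none>"
        result.append((pvc["metadata"]["name"], phase, storage_class))
    return result
```

Usage: `unbound_claims(load_claims("c7n-system"))`. After that, `kubectl describe pvc mysql -n c7n-system` shows the binding and provisioning events for a single claim.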

- Cause analysis:

I assumed it was a slow network, but the installation is still stuck here after three hours.

- Question:

How can this problem be solved?